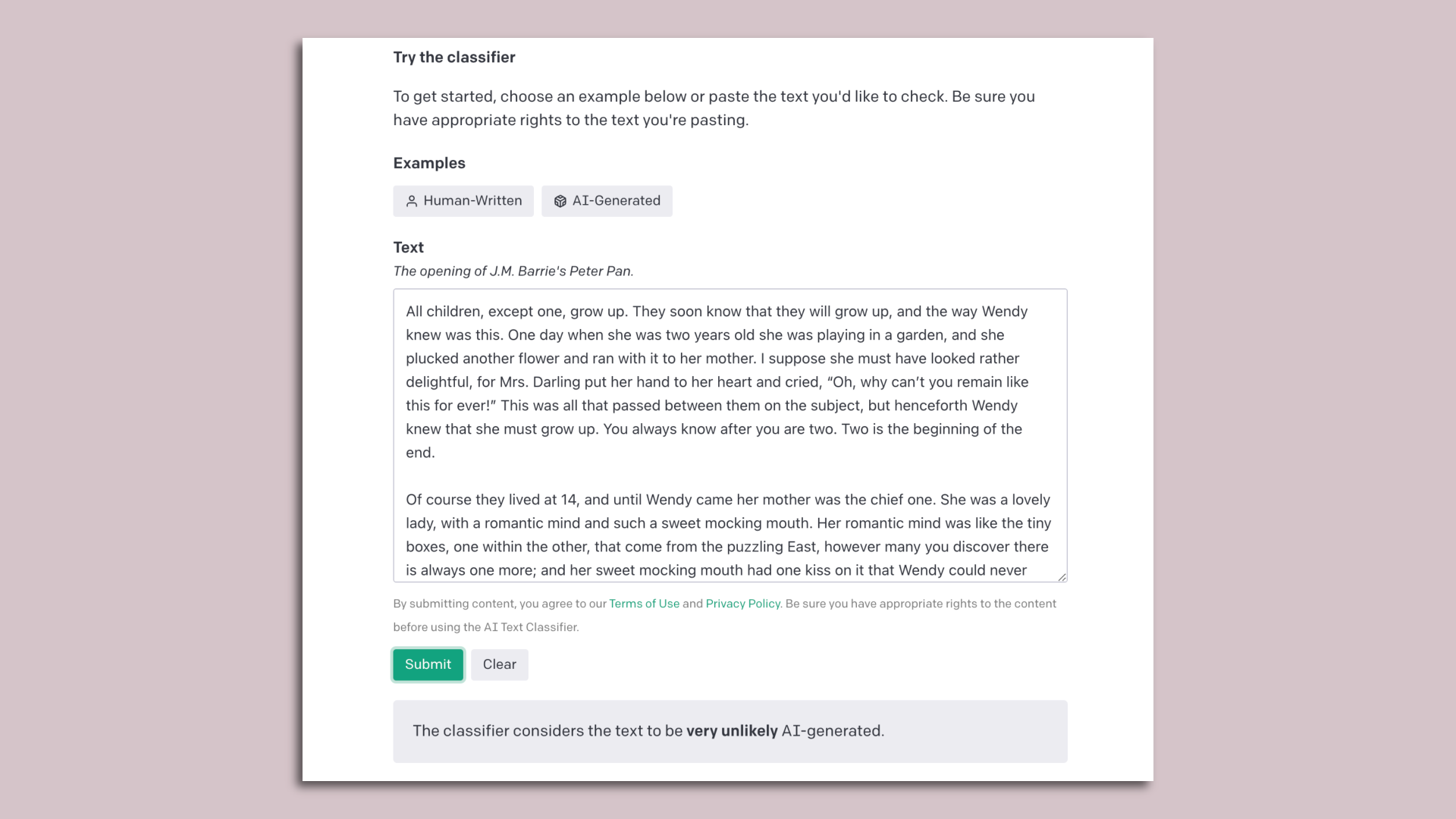

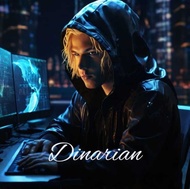

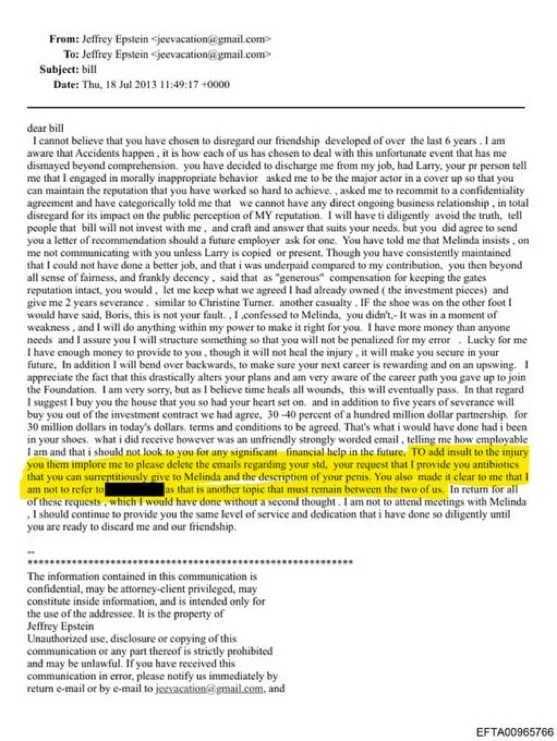

A screenshot of OpenAI's tool for detecting machine-written text. Image: OpenAI

ChatGPT creator OpenAI today released a free web-based tool designed to help educators and others figure out if a particular chunk of text was written by a human or a machine.

Yes, but: OpenAI cautions that the tool is imperfect and that its performance depends on how closely the text being analyzed resembles the types of writing the tool was trained on.

- "It has both false positives and false negatives," OpenAI head of alignment Jan Leike told Axios, cautioning that the new tool should not be relied on alone to determine who wrote a document.

How it works: Users paste a chunk of text into a box, and the system rates how likely it is that the text was generated by an AI system.

- It offers a five-point scale of results: Very unlikely to have been AI-generated, unlikely, unclear, possible or likely.

- It works best on English text samples longer than 1,000 words, and its performance is significantly worse in other languages. It also can't distinguish computer code written by humans from code written by AI.

- That said, OpenAI says the new tool is significantly better than a detection tool it released previously.

The big picture: Concerns are high, especially in education, over the emergence of powerful tools like ChatGPT. New York schools, for example, have banned the technology on their networks.

- Experts are also worried about a rise in AI-generated misinformation as well as the potential for bots to pose as humans.

- A number of other companies, organizations and individuals are working on similar tools to detect AI-generated content.

Between the lines: OpenAI said it is looking at other ways to help people distinguish AI-generated text from human-written text, such as adding watermarks to work produced by its AI systems.

