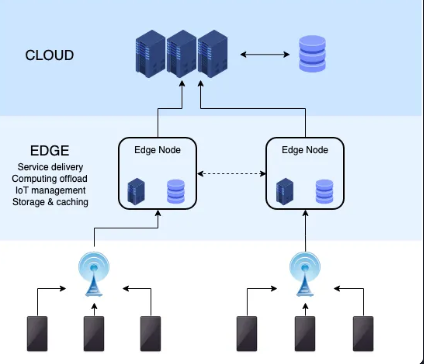

Theta Labs CTO Jieyi Long and the Theta engineering team began researching AI, distributed systems, and machine learning applied to blockchain in early 2022, when we invited Zhen Xiao, Professor of Computer Science at Peking University, to join Theta as an academic advisor. Prof. Xiao completed his undergraduate studies at Peking University and received his Ph.D. in Computer Science from Cornell University, and over the past 20+ years he has published dozens of research papers across various AI disciplines.

Optimized AI Compute Task Scheduling in EdgeCloud Virtualization Layer

Jieyi co-authored a research paper with Prof. Xiao’s team titled “A Dual-Agent Scheduler for Distributed Deep Learning Jobs on Public Cloud via Reinforcement Learning”, published in August 2023 at ACM KDD ’23, one of the most prestigious international conferences on knowledge discovery and data mining. The lessons learned from this research have found their way into the implementation of the Theta EdgeCloud virtualization layer, particularly in how AI compute task scheduling algorithms are optimized in a distributed environment.

In summary, the technical paper describes how cloud computing systems use powerful graphics processing units (GPUs) to train complex artificial intelligence models. Efficient job scheduling is crucial for managing these systems: it involves deciding the order in which jobs run, and when and on which of the available GPUs they run, which is particularly challenging when the GPUs are distributed globally. Traditional methods struggle with this complex task, while the machine learning approaches described in the paper show promise.

Traditional rule-based and heuristic-based methods often focus on only one aspect of scheduling and don’t consider how tasks and GPUs interact. For instance, they don’t account for differences in performance between GPUs or for competition for shared resources, both of which can affect how long tasks take to complete.

To address these challenges, the paper proposes a new approach called a “dual-agent scheduler.” It uses two learning agents, the “Placement Agent” and the “Ordering Agent” shown in the diagram above, to jointly decide task order and GPU placement and, more importantly, to take into account how these two decisions affect each other. It also introduces a method for modeling and managing differences in GPU performance. Tested on real-world data, the proposed approach shows promising results, improving the efficiency of cloud computing systems.
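To make the split between the two decisions concrete, here is a toy Python sketch of the shape of the scheduling loop: an ordering step picks which queued job runs next, and a placement step picks which GPU it runs on, using a per-GPU `speed` factor to model heterogeneous GPU performance. Both policies below are simple greedy heuristics standing in for the paper’s learned reinforcement learning agents; the `Job` and `Gpu` fields, the shortest-job-first ordering rule, and the earliest-finish placement rule are illustrative assumptions, and resource contention between co-located jobs is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    work: float  # abstract units of compute the job needs (assumed known up front)


@dataclass
class Gpu:
    name: str
    speed: float          # relative throughput; models GPU heterogeneity (assumed)
    free_at: float = 0.0  # time at which this GPU becomes idle


def ordering_policy(queue: list[Job]) -> Job:
    # Stand-in for the Ordering Agent: shortest job first (greedy heuristic).
    return min(queue, key=lambda job: job.work)


def placement_policy(job: Job, gpus: list[Gpu]) -> Gpu:
    # Stand-in for the Placement Agent: pick the GPU on which this job would
    # finish earliest, given that GPU's current backlog and relative speed.
    return min(gpus, key=lambda gpu: gpu.free_at + job.work / gpu.speed)


def schedule(jobs: list[Job], gpus: list[Gpu]) -> list[tuple[str, str, float]]:
    """Return (job, gpu, finish_time) tuples in dispatch order; all jobs arrive at t=0."""
    queue = list(jobs)
    plan = []
    while queue:
        job = ordering_policy(queue)       # decision 1: which job runs next
        queue.remove(job)
        gpu = placement_policy(job, gpus)  # decision 2: where it runs
        gpu.free_at += job.work / gpu.speed
        plan.append((job.name, gpu.name, gpu.free_at))
    return plan
```

For example, with jobs of 4, 8 and 10 work units and one GPU twice as fast as the other, the two shorter jobs land on the fast GPU and the longest one on the slow GPU, because by then the fast GPU’s backlog outweighs its speed advantage. The placement outcome depends on the order already chosen, which is exactly the interaction the dual-agent scheduler is designed to learn jointly.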

Tree-of-Thought: A New Approach to Solving Complex Reasoning Problems by LLMs powered by EdgeCloud

In May 2023, Jieyi authored an innovative research paper titled “Large Language Model Guided Tree-of-Thought (ToT)”, and its preprint is available here. The insights and approach in this paper could have fundamental implications for improving the reasoning capability of large language models (LLMs) on complex problems. The Tree-of-Thought (ToT) technique is inspired by how the human mind solves complex reasoning tasks: through trial and error, following a tree-like thought process that allows backtracking when necessary. This raises the possibility of large language models mirroring the human mind in complex problem-solving tasks.

Since the release of the preprint, the tree-of-thought concept has gained widespread recognition in the LLM community. The paper has already been cited by dozens of research articles, as well as several technical posts, including this Forbes article. Separately, LangChain, a highly popular open-source language model library, has officially implemented ToT based on Jieyi’s paper (see here for more details). Coincidentally, just two days after Jieyi uploaded his preprint, a research team from Princeton University and Google DeepMind published another widely cited “Tree of Thoughts” paper with very similar ideas. The ToT concept has inspired a line of influential work on improving LLM reasoning, including Graph of Thoughts and Algorithm of Thoughts. In a keynote talk at Microsoft Build 2023, Andrej Karpathy from OpenAI commented that the ToT approach lets an LLM explore different reasoning paths and potentially correct its mistakes or avoid dead ends in its reasoning, similar to how AlphaGo uses Monte Carlo Tree Search to explore multiple possible moves in the game of Go before selecting the best one.

The research paper introduces the Tree-of-Thought (ToT) framework, which enhances LLMs’ ability to solve mathematical puzzles such as Sudoku. The framework augments the LLM with a prompter agent, a checker module, a memory module, and a ToT controller. To solve a given problem, these modules engage in a multi-round conversation with the LLM. The memory module records the conversation and the state history of the problem-solving process, which allows the system to backtrack to earlier steps of the thought process and explore other directions from there.
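The loop below is a minimal Python sketch of how the four modules could fit together on a 4x4 Sudoku. It rests on several assumptions: a deterministic stub `propose` stands in for the prompter agent plus the LLM (it simply suggests every digit for the first empty cell), `is_valid` plays the role of a rule-based checker module, a stack of untried candidate states per tree depth plays the memory module, and `tot_solve` is the controller that decides when to go deeper and when to backtrack. All of these names are hypothetical; this illustrates the control flow, not the paper’s implementation.

```python
from typing import Optional

Grid = list[list[int]]  # 4x4 Sudoku board; 0 marks an empty cell


def is_valid(grid: Grid) -> bool:
    """Checker module (rule-based here): no repeated digit in any row, column or 2x2 box."""
    groups = list(grid)
    groups += [[grid[r][c] for r in range(4)] for c in range(4)]
    groups += [[grid[r][c] for r in range(br, br + 2) for c in range(bc, bc + 2)]
               for br in (0, 2) for bc in (0, 2)]
    for group in groups:
        digits = [d for d in group if d]
        if len(digits) != len(set(digits)):
            return False
    return True


def propose(grid: Grid) -> list[Grid]:
    """Stub for the prompter agent + LLM: candidate next states for the first empty cell."""
    for r in range(4):
        for c in range(4):
            if grid[r][c] == 0:
                children = []
                for d in range(1, 5):
                    child = [row[:] for row in grid]
                    child[r][c] = d
                    children.append(child)
                return children
    return []


def tot_solve(puzzle: Grid, max_steps: int = 10_000) -> Optional[Grid]:
    """ToT controller: expand valid partial solutions, backtrack from dead ends."""
    # Memory module: one list of not-yet-tried candidate states per tree depth.
    memory: list[list[Grid]] = [[puzzle]]
    for _ in range(max_steps):
        if not memory:
            return None                # every branch exhausted: no solution
        if not memory[-1]:
            memory.pop()               # dead end at this depth: backtrack one level
            continue
        state = memory[-1].pop()
        if not is_valid(state):
            continue                   # checker rejects this thought; try a sibling
        if all(all(row) for row in state):
            return state               # complete and valid: solved
        memory.append(propose(state))  # go one level deeper in the tree
    return None                        # step budget exhausted
```

In an LLM-driven version, `propose` is where the prompter would query the language model for the next partial solution, while the checker, memory, and controller keep the search from getting stuck on a wrong early guess.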

To enable the ToT system to develop novel problem-solving strategies not found in its training data, we could potentially adopt the “self-play” technique used by game-playing AI agents such as AlphaGo. Unlike traditional self-supervised learning, self-play reinforcement learning allows a broader exploration of the solution space, potentially leading to significant improvements. By introducing a “quizzer” module that generates problem descriptions for training, much as AlphaGo generates its own games through self-play, the ToT framework could expand its problem-solving capabilities beyond the examples in its training data.
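As a rough illustration of the quizzer idea, the Python sketch below generates fresh 4x4 Sudoku training puzzles by relabeling the digits of a known solved grid and then blanking cells, keeping a blank only if the puzzle still has exactly one solution. The relabeling trick, the backtracking uniqueness check, and the hypothetical `quiz` and `count_solutions` functions are illustrative assumptions; the paper only proposes that a quizzer generate problem descriptions for self-play training.

```python
import random

# A known solved 4x4 grid used as a template (0 marks an empty cell in puzzles).
BASE = [[1, 2, 3, 4], [3, 4, 1, 2], [2, 1, 4, 3], [4, 3, 2, 1]]


def count_solutions(grid: list[list[int]], limit: int = 2) -> int:
    """Count completions of a consistent 4x4 grid by backtracking, stopping at `limit`."""
    for r in range(4):
        for c in range(4):
            if grid[r][c] == 0:
                br, bc = r - r % 2, c - c % 2
                used = set(grid[r]) | {grid[i][c] for i in range(4)}
                used |= {grid[i][j] for i in range(br, br + 2) for j in range(bc, bc + 2)}
                total = 0
                for d in range(1, 5):
                    if d not in used:
                        grid[r][c] = d
                        total += count_solutions(grid, limit - total)
                        grid[r][c] = 0  # undo the trial placement
                        if total >= limit:
                            break
                return total
    return 1  # no empty cell left: the grid itself is one solution


def quiz(blanks: int, rng: random.Random) -> tuple[list[list[int]], list[list[int]]]:
    """Hypothetical quizzer: return (puzzle, solution) where the puzzle has a unique answer."""
    perm = list(range(1, 5))
    rng.shuffle(perm)
    # Relabeling digits with a permutation keeps every row, column and box valid.
    solution = [[perm[d - 1] for d in row] for row in BASE]
    puzzle = [row[:] for row in solution]
    cells = [(r, c) for r in range(4) for c in range(4)]
    rng.shuffle(cells)
    removed = 0
    for r, c in cells:
        if removed == blanks:
            break
        saved = puzzle[r][c]
        puzzle[r][c] = 0
        if count_solutions(puzzle) == 1:
            removed += 1
        else:
            puzzle[r][c] = saved  # this blank would make the answer ambiguous
    return puzzle, solution
```

In a self-play setup, puzzles like these could be handed to the solver and graded automatically against the stored solution, so training signal does not depend on a fixed, human-curated set of examples.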

To verify the effectiveness of the proposed technique, we implemented a ToT-based solver for Sudoku puzzles. Experimental results show that the ToT framework can significantly increase the success rate of solving Sudoku puzzles. Our implementation of the ToT-based Sudoku solver is available on GitHub.

This work has significant implications for Theta: it helps Theta be viewed as a thought leader in the AI industry and, more importantly, as a pioneer of the largest hybrid-cloud decentralized AI compute infrastructure. It will also enable EdgeCloud to support next-generation LLMs and other AI systems, such as LLM-powered autonomous agents. Such an agent typically runs multiple deep learning models orchestrated by a ToT-enhanced LLM acting as the agent’s “brain.” All of these models, including the ToT-enhanced LLM, could potentially run within EdgeCloud, extending Theta EdgeCloud’s capabilities to support AI systems of ever-growing complexity.

EdgeCloud Future — a Decentralized Data Marketplace for Training AI Models

Lastly, Jieyi and the team collaborated with Ali Farahanchi, Theta’s lead equity investor, and researchers from USC, Texas A&M, and FedML to co-author “Proof-of-Contribution-Based Design for Collaborative Machine Learning on Blockchain”, published at the 2023 IEEE International Conference on Decentralized Applications and Infrastructure, with the full paper available here. This research lays the groundwork for future work on Theta EdgeCloud, creating the opportunity to implement a decentralized data marketplace for training AI models. The proposed design ensures that data contributors are fairly compensated with crypto rewards, that training data remains fully private, that the system withstands malicious parties, and that data contributions and data quality can be verified using zero-knowledge (ZK) proofs.

This paper can be summarized with the following scenario. Imagine you need to create a new AI model, and you need data and computing power to train it. Instead of collecting all the data yourself, you want to collaborate with others who have data to contribute. But you also want to make sure everyone is fairly compensated and the data stays private. The system needs to protect against sneaky attempts to sabotage the model, ensure all computations are accurate, and work efficiently for everyone involved. To achieve this, the paper proposes a blockchain-based data marketplace, combined with easy access to a GPU marketplace tailored to the needs of AI developers. Here’s how it works:

Fair Compensation: Trainers are rewarded with crypto based on their contributions to training the model. This ensures everyone gets paid fairly for their input.

Privacy Protection: Data doesn’t need to be moved around, preserving its privacy. Trainers can keep their data secure while still contributing to the model.

Security Against Malicious Behavior: The system is designed to withstand attempts by malicious parties to disrupt or corrupt the model during training.

Verification: All computations in the marketplace, including assessing contributions and detecting outliers, can be verified using zero-knowledge proofs. This ensures the integrity of the process.

Efficiency and Universality: The marketplace is designed to be efficient and adaptable for different projects and participants.

In this system, a blockchain-based marketplace coordinates everything. A special processing node called an aggregator handles tasks such as evaluating contributions, filtering out bad data, and adjusting the model’s settings. Smart contracts on the blockchain ensure that everyone follows the rules and that honest contributors receive their fair share of the rewards. The researchers implemented and tested the system’s components and, through various experiments, showed how it can be used effectively in real-world situations.
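The sketch below illustrates, in Python, the kind of bookkeeping an aggregator and reward contract perform in one round: flag submitted model updates that sit far from the coordinate-wise median as outliers, average the remaining updates, and split a reward pool among the remaining trainers in proportion to their contribution scores. The median-distance outlier rule, the threshold `k`, the hypothetical `aggregate` function, and the assumption that contribution scores are already computed are illustrative choices rather than the paper’s exact contribution measure; the zero-knowledge proofs that would make these computations verifiable are not modeled.

```python
import statistics


def aggregate(updates: dict[str, list[float]], scores: dict[str, float],
              reward_pool: float, k: float = 3.0):
    """Hypothetical aggregator round.

    updates: trainer -> flattened model update (all the same length)
    scores:  trainer -> non-negative contribution score (assumed precomputed)
    Returns (averaged update from accepted trainers, accepted trainers, rewards).
    """
    dims = len(next(iter(updates.values())))
    # Coordinate-wise median: a reference point robust to a minority of extreme updates.
    center = [statistics.median(u[i] for u in updates.values()) for i in range(dims)]

    def distance(u: list[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(u, center)) ** 0.5

    dist = {t: distance(u) for t, u in updates.items()}
    typical = statistics.median(dist.values())
    # Updates more than k times the typical distance away are treated as outliers.
    accepted = [t for t, d in dist.items() if d <= k * typical]

    model_update = [statistics.fmean(updates[t][i] for t in accepted) for i in range(dims)]
    total = sum(scores[t] for t in accepted)
    # Accepted trainers share the pool pro rata; outliers receive nothing.
    rewards = {t: reward_pool * scores[t] / total if t in accepted and total > 0 else 0.0
               for t in updates}
    return model_update, accepted, rewards
```

With three honest trainers submitting similar updates and one submitting a wildly different one, the outlier is excluded from both the averaged update and the payout, even if it claims a high contribution score.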

In summary, Jieyi and the entire Theta engineering team truly value academic research and experience, and the ways they directly benefit the Theta EdgeCloud platform and products. It is exciting to see some research concepts, like the AI job scheduler, being rapidly incorporated into the first EdgeCloud release on May 1, while others have longer-term implications, such as the possibility of building ToT-powered autonomous LLM agents and a decentralized data marketplace for training AI models. In the meantime, Theta is committed to becoming a thought leader in the AI industry by sharing research and releasing core AI building blocks with the wider community, starting with Jieyi’s deep research on Tree-of-Thought algorithms.
