In an industry dominated by commercial AI labs, blockchain technology is giving universities more cost-effective access to compute and helping them compete.

The rapid advancement of artificial intelligence has created an unprecedented divide between commercial and academic research. While Silicon Valley's tech giants pour billions into developing ever-larger language models and sophisticated AI systems, university labs increasingly find themselves unable to compete. This disparity raises serious questions about the future of AI development and who gets to shape it.

Academic AI Labs Are Being Vastly Outspent

In recent years, commercial laboratories have dramatically outspent academic institutions in AI research. In 2021, industry giants spent more than $340 billion globally on AI research and development, dwarfing the financial contributions from governments. For comparison, US government agencies (excluding the Department of Defense) invested $1.5 billion, while the European Commission allocated €1 billion (around $1.1 billion) to similar efforts.

This enormous gap in spending has given commercial labs a clear advantage, especially in terms of access to vital resources like computing power, data and talent. With these assets, companies are leading the development of advanced AI models at a scale that academic institutions struggle to match. Industry AI models are, on average, 29 times larger than those developed in universities, showcasing the stark difference in resources and capabilities.

The sheer size and complexity of these industry-driven models highlight the dominance of commercial labs in the race to develop cutting-edge artificial intelligence, leaving academic research labs trailing far behind.

The reasons for this disparity extend beyond simple economics. While commercial AI labs can operate with long-term horizons and significant risk tolerance, academic researchers must navigate complex grant cycles, institutional bureaucracies and limited budgets.

Perhaps most critically, academic institutions often lack access to the massive computing infrastructure required for cutting-edge AI research. Training large language models can cost millions in computing resources alone – a prohibitive expense for most university departments. This creates a troubling dynamic where potentially groundbreaking research ideas may never see the light of day simply due to the high cost of compute.

This cost is growing exponentially. According to estimates from the Stanford Institute for Human-Centered Artificial Intelligence (HAI), OpenAI’s GPT-3 and Google’s PaLM cost roughly $4.3M and $12.4M to train, while the more recent GPT-4 and Google’s Gemini Ultra cost an estimated $78M and $191M, respectively. If this growth continues over the next few years, as many expect, new foundation models could soon cost billions to train.

The 2024 AI Index Report from Stanford HAI reinforces this trend. It highlights the skyrocketing costs of training AI models, the potential depletion of high-quality training data, the rapid rise of foundation models and a growing shift toward open-source AI. Most of these trends favor well-resourced companies and make it harder for academic institutions to keep pace.

However, new solutions are emerging that could help level the playing field. Distributed computing infrastructure, built on decentralized architecture powered by blockchain technology, is beginning to offer researchers alternative paths to access high-performance computing resources at a fraction of traditional costs. These networks aggregate unused GPU computing power from thousands of participants worldwide, creating a shared pool of resources that can be accessed on demand.

On Decentralized Networks

Recent developments in this space are promising. Several major research universities in South Korea, including KAIST and Yonsei University, have begun using Theta EdgeCloud, our decentralized computing network of more than 30,000 globally distributed edge nodes, for AI research. They have achieved results comparable to those from traditional cloud services at one-half to one-third of the cost. Their early successes suggest a viable path forward for other academic institutions facing similar resource constraints.

The implications extend far beyond cost savings. When academic researchers can compete more effectively with commercial labs, AI development is more likely to benefit from diverse perspectives and approaches. University research typically puts transparency, peer review and the public good ahead of commercial interests, releasing open-source models and public data sets. These values become increasingly important as AI systems grow more powerful and influential in society.

Consider the current debate around AI safety and ethics. While commercial labs face pressure to ship new revenue-generating capabilities quickly, academic researchers often take more measured approaches and examine potential risks and societal impacts thoroughly. However, this crucial work requires significant computational resources to test and validate safety measures and to sift through vast amounts of data. More affordable access to computing power could enable more comprehensive safety research and testing.

We're also seeing promising developments in specialized AI applications that may lack clear profit potential but could provide significant societal benefits. Researchers at several universities are using distributed computing networks to develop AI models for ultra-rare disease research, climate science and other public-interest applications that are unlikely to attract commercial investment.

Openness and Transparency

Beyond the question of resources, academic institutions offer another crucial advantage: transparency and public accountability in their research. While commercial AI labs like OpenAI and Google Brain produce groundbreaking work, their research often occurs within closed environments where methodologies, data sources and negative results may not be fully disclosed. This isn't necessarily due to any misconduct – proprietary technology and competitive advantages are legitimate business concerns – but it does create limitations in how thoroughly their work can be examined and validated by the broader scientific community.

Academic research, by contrast, operates under different incentives. Universities typically publish comprehensive methodologies, open-source their models, share detailed results (including failed experiments) and subject their work to rigorous peer review. This openness allows other researchers to validate findings, build on successful approaches and learn from unsuccessful ones. For example, when KAIST AI researchers recently improved Stable Diffusion’s open-source text-to-image models for virtual clothing applications in e-commerce, they published complete technical documentation, their methodology and public-domain training data sets, enabling other institutions to replicate and enhance their work.

The distributed computing networks now emerging could amplify these benefits of academic research. As more universities gain access to affordable computing power, we're likely to see more reproducible studies, collaborative projects and open-source implementations. Many universities in South Korea and around the globe are already sharing their AI models and data sets through these networks, creating a virtuous cycle of innovation and verification.

This combination of computational accessibility and academic transparency could prove transformative. When researchers can both afford to run ambitious AI experiments and freely share their results, it accelerates the entire field's progress.

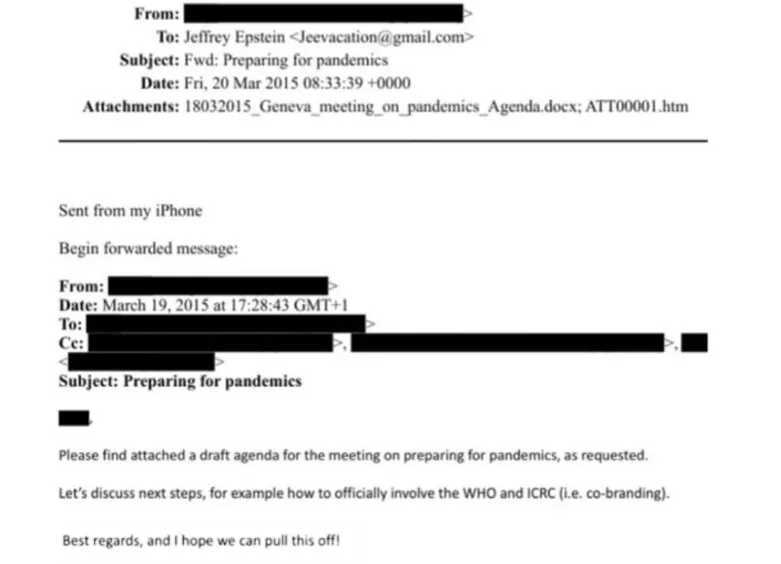

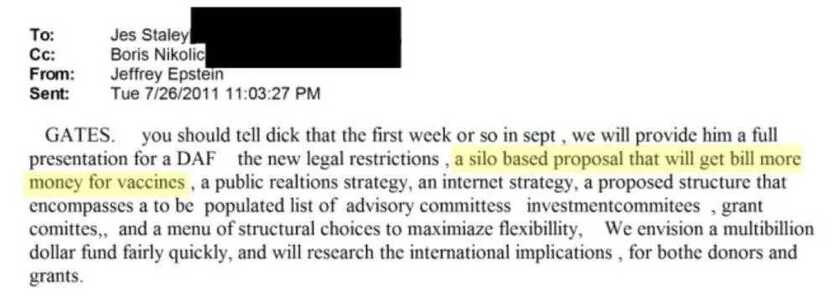
