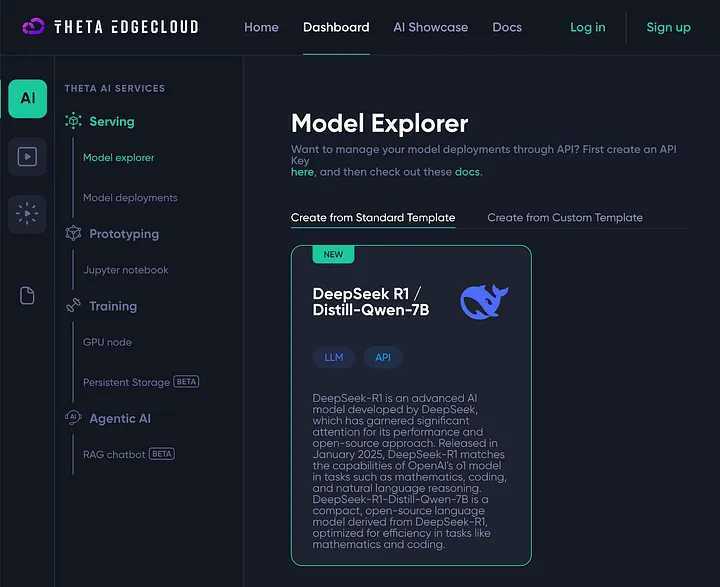

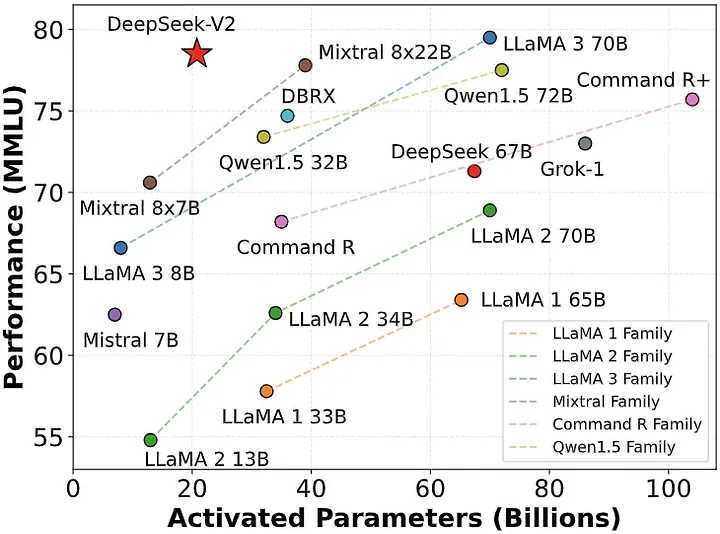

DeepSeek-R1, the newest LLM released by Chinese AI startup DeepSeek, has quickly shaken up the AI landscape by achieving similar performance to leading LLMs from OpenAI (ChatGPT), Mistral (Mixtral) and Meta (LLaMA) despite using a fraction of the resources for both training and inference.

EdgeCloud, the leading decentralized GPU cloud infrastructure, can greatly benefit from new advancements in AI model training and optimization, especially those that make AI leaner and more efficient. EdgeCloud has now added support for DeepSeek-R1 as a standard model template.

We see the combination of innovations like DeepSeek’s lower compute requirements and EdgeCloud’s decentralized infrastructure providing significant competitive advantages:

Cutting-Edge LLMs on Consumer GPUs

DeepSeek’s models, such as V3 and R1, use techniques like multi-head latent attention (MLA) and FP8 precision that significantly reduce memory and computation costs. This is a key benefit for a decentralized GPU network such as Theta EdgeCloud, because it makes running cutting-edge large language models (LLMs) on consumer-grade GPUs a possibility.
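To give a rough sense of why lower precision matters for consumer GPUs, the memory needed just to hold a model's weights is approximately the parameter count multiplied by the bytes per parameter. The sketch below is illustrative only: the 7-billion-parameter size is a hypothetical example rather than a figure from this post, and it ignores activations, the KV cache, and runtime overhead.

```python
# Bytes needed to store a single parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical model size, chosen purely for illustration.
    hypothetical_params = 7_000_000_000
    for precision in ("fp32", "fp16", "fp8"):
        gb = weight_memory_gb(hypothetical_params, precision)
        print(f"{precision}: {gb:.1f} GB for weights")
```

Under these assumptions, storing weights in FP8 takes half the memory of FP16 and a quarter of FP32, which is the kind of reduction that can bring a model within reach of a consumer-grade card.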

Moreover, it democratizes access to advanced AI technologies by enabling more developers, researchers, and small-scale enterprises to leverage state-of-the-art models without relying on expensive centralized cloud infrastructures. Pairing this with affordable AI infrastructure like EdgeCloud enables a wider range of developers and academic researchers to drive innovation.

Scalability and Flexibility

Inference tasks for LLMs, including DeepSeek R1, could scale better on a decentralized GPU network. Because decentralized networks like Theta EdgeCloud allow GPU nodes to be added or removed with ease, inference capacity can scale up or down with the demands of the AI models being served. If a model requires more processing power, additional nodes from the network can handle the increased workload without substantial investment in new physical infrastructure. This flexibility lets DeepSeek R1 and similar models draw on on-demand computational power, enabling cost-efficient scaling based on the actual needs of the AI task at hand.
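The scaling idea above can be sketched as a simple sizing rule: divide the current request rate by what one node can serve, round up, and clamp the result to the allowed range. This is a minimal illustration, not EdgeCloud's actual scheduler or API; the function name, parameters, and limits are all assumptions made for the example.

```python
import math


def nodes_needed(
    requests_per_sec: float,
    per_node_capacity: float,
    min_nodes: int = 1,
    max_nodes: int = 100,
) -> int:
    """Return how many GPU nodes a hypothetical autoscaler would request.

    per_node_capacity is the number of requests per second one node can
    serve; min_nodes and max_nodes are illustrative bounds.
    """
    if per_node_capacity <= 0:
        raise ValueError("per_node_capacity must be positive")
    wanted = math.ceil(requests_per_sec / per_node_capacity)
    # Clamp to the configured range so the pool never drops to zero
    # and never grows without bound.
    return max(min_nodes, min(max_nodes, wanted))


if __name__ == "__main__":
    for load in (0, 45, 5000):
        print(f"{load} req/s -> {nodes_needed(load, per_node_capacity=10)} nodes")
```

A real scheduler would also account for node warm-up time, model loading, and request latency targets, none of which are modeled here.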

Cost Efficiency

By utilizing a decentralized GPU network, inference tasks with DeepSeek R1 and other models can further lower costs by drawing computational resources from a large pool of distributed GPUs instead of relying on expensive centralized data centers. This approach reduces the need for heavy capital investment in physical hardware, as teams running LLMs pay only for the compute power they use. Decentralized networks like EdgeCloud often use underutilized or excess computational power from devices and nodes that are not running at full capacity, further driving down costs for teams running DeepSeek models.
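The pay-per-use argument comes down to simple arithmetic: an always-on fleet is billed for every hour in the period, while on-demand usage is billed only for the GPU-hours actually consumed. The sketch below uses made-up numbers in arbitrary cost units and assumes the same hourly rate in both cases, which is a simplification; it does not reflect actual EdgeCloud or data center pricing.

```python
def on_demand_cost(gpu_hours_used: float, hourly_rate: float) -> float:
    """Cost when paying only for the GPU-hours actually consumed."""
    return gpu_hours_used * hourly_rate


def always_on_cost(num_gpus: int, hours_in_period: float, hourly_rate: float) -> float:
    """Cost when a fixed number of GPUs is reserved for the whole period."""
    return num_gpus * hours_in_period * hourly_rate


if __name__ == "__main__":
    # All values below are hypothetical and chosen only for illustration.
    rate = 1.0             # cost units per GPU-hour
    hours_in_month = 720   # 30 days * 24 hours
    reserved_gpus = 4
    gpu_hours_used = 600   # actual inference workload over the month

    print("on-demand:", on_demand_cost(gpu_hours_used, rate))
    print("always-on:", always_on_cost(reserved_gpus, hours_in_month, rate))
```

The gap between the two figures grows as utilization of the reserved fleet falls, which is why bursty inference workloads tend to favor on-demand capacity.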

Energy Efficiency and Sustainability

Instead of running all tasks in a centralized, power-hungry data center, decentralized networks can use resources distributed across a variety of locations, which makes it possible to draw on greener energy sources and avoid the energy waste associated with large, centralized facilities. Energy efficiency is a growing concern in AI model training, and a decentralized network like EdgeCloud can help meet sustainability goals while still processing large volumes of data.

Conclusion

In short, the Theta team is excited to continue pushing the boundaries of edge computing and decentralized cloud infrastructure for AI and other applications. The EdgeCloud platform allows new AI models like DeepSeek V3/R1 to maximize their cost-effectiveness, reduce latency, enhance scalability, and improve security, while offering a sustainable infrastructure to meet the growing future demands of AI processing and training.
